Using minimize

We have already used the minimize function a few times:

from scipy.optimize import minimize

Now we pause to look at how it works, and how best to use it.

Let’s go back to the problem from the finding lines page:

# Our usual imports and configuration.

import numpy as np

import pandas as pd

pd.set_option('mode.copy_on_write', True)

import matplotlib.pyplot as plt

# Make plots look a little bit more fancy

plt.style.use('fivethirtyeight')

We used the student ratings dataset.

Download the data file via rate_my_course.csv.

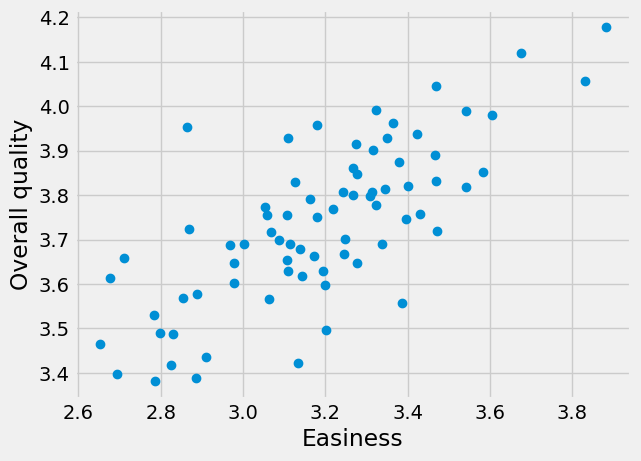

We were looking for the best slope to relate the Easiness ratings to the Overall Quality ratings.

# Read the data file, get columns as arrays.

ratings = pd.read_csv('rate_my_course.csv')

easiness = np.array(ratings['Easiness'])

quality = np.array(ratings['Overall Quality'])

plt.plot(easiness, quality, 'o')

plt.xlabel('Easiness')

plt.ylabel('Overall quality')


Here is the function we used to calculate the root mean squared error (RMSE), adapted for minimize.

def calc_rmse_for_minimize(c_s):
    # c_s has two elements, the intercept c and the slope s.
    c = c_s[0]
    s = c_s[1]
    predicted_quality = c + easiness * s
    errors = quality - predicted_quality
    return np.sqrt(np.mean(errors ** 2))
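Written out as a formula, with x_i the easiness values, y_i the quality values, and n the number of rows, the function above calculates:

```latex
\mathrm{RMSE}(c, s) = \sqrt{\frac{1}{n} \sum_{i=1}^{n} \left( y_i - (c + s x_i) \right)^2}
```

Because the square root only ever increases as its argument increases, the (c, s) pair that minimizes the RMSE is the same pair that minimizes the mean squared error.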

We called minimize to find the best intercept and slope:

min_res = minimize(calc_rmse_for_minimize, [2.25, 0.47])

min_res

message: Optimization terminated successfully.

success: True

status: 0

fun: 0.11930057876222537

x: [ 2.115e+00 5.089e-01]

nit: 4

jac: [-2.442e-06 1.345e-06]

hess_inv: [[ 1.773e+01 -5.527e+00]

[-5.527e+00 1.735e+00]]

nfev: 24

njev: 8

The result of minimize

Notice that the thing minimize returns is a special kind of object that stores information about the result:

# The value that comes back is a special thing to contain minimize results:

type(min_res)

scipy.optimize._optimize.OptimizeResult

Among the interesting things the result contains is the attribute fun.

This is the minimal value that minimize found for the function we are trying to minimize; here, the RMSE for a given intercept and slope:

# The minimal value that `minimize` could find for our function.

min_res.fun

0.11930057876222537

We also have an array with the values for the intercept and slope that give the minimal value:

min_res.x

array([2.11475042, 0.50887914])

We confirm that min_res.fun is indeed the value we get from our function, given the intercept and slope array in min_res.x:

calc_rmse_for_minimize(min_res.x)

0.11930057876222537
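Here is a minimal sketch of the same checks that runs without rate_my_course.csv. The x and y values are made up for illustration, and the function name rmse_for_minimize is our own. It also shows that the result object behaves like a dictionary, so min_res['fun'] and min_res.fun give the same value, and that nfev records how many times minimize called our function.

```python
import numpy as np
from scipy.optimize import minimize

# Made-up data standing in for easiness and quality (an assumption,
# so the sketch runs without rate_my_course.csv).
rng = np.random.default_rng(42)
x_values = np.linspace(1, 5, 50)
y_values = 2.0 + 0.5 * x_values + rng.normal(0, 0.3, size=50)

def rmse_for_minimize(c_s):
    # c_s has two elements, the intercept c and the slope s.
    c = c_s[0]
    s = c_s[1]
    errors = y_values - (c + x_values * s)
    return np.sqrt(np.mean(errors ** 2))

res = minimize(rmse_for_minimize, [2.25, 0.47])

# success reports whether minimize thinks it converged.
print('Converged:', res.success)
# fun is the minimal value found; x holds the parameters giving it.
print('Minimal RMSE:', res.fun)
print('Best intercept and slope:', res.x)
# nfev counts how many times minimize called our function.
print('Function calls:', res.nfev)
# The result also behaves like a dictionary.
print(res['fun'] == res.fun)
# Recalculating the objective at res.x reproduces res.fun.
print(np.isclose(rmse_for_minimize(res.x), res.fun))
```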

The function to minimize

calc_rmse_for_minimize is a function:

type(calc_rmse_for_minimize)

function

We pass the function to minimize as an argument for it to use.

We call the function we pass to minimize the objective function, in the sense that our objective is to minimize this function.

How is minimize using our objective function? Clearly minimize is calling the function, but what arguments is it sending? How many times does it call the function?

To find out, we can stick something inside the objective function to tell us each time it is called:

def rmse_func_for_info(c_s):
    # Print the argument that minimize sent.
    print('Called with', c_s, 'of type', type(c_s))
    # The rest of the function is the same as the original above.
    # c_s has two elements, the intercept c and the slope s.
    c = c_s[0]
    s = c_s[1]
    predicted_quality = c + easiness * s
    errors = quality - predicted_quality
    return np.sqrt(np.mean(errors ** 2))

Then we call minimize with the new objective function:

min_res = minimize(rmse_func_for_info, [2.25, 0.47])

min_res

Called with [2.25 0.47] of type <class 'numpy.ndarray'>

Called with [2.25000001 0.47 ] of type <class 'numpy.ndarray'>

Called with [2.25 0.47000001] of type <class 'numpy.ndarray'>

Called with [2.15529163 0.19056832] of type <class 'numpy.ndarray'>

Called with [2.15529164 0.19056832] of type <class 'numpy.ndarray'>

Called with [2.15529163 0.19056833] of type <class 'numpy.ndarray'>

Called with [2.24765108 0.46306966] of type <class 'numpy.ndarray'>

Called with [2.2476511 0.46306966] of type <class 'numpy.ndarray'>

Called with [2.24765108 0.46306967] of type <class 'numpy.ndarray'>

Called with [2.24898418 0.46700288] of type <class 'numpy.ndarray'>

Called with [2.24898419 0.46700288] of type <class 'numpy.ndarray'>

Called with [2.24898418 0.46700289] of type <class 'numpy.ndarray'>

Called with [2.24209018 0.46915035] of type <class 'numpy.ndarray'>

Called with [2.24209019 0.46915035] of type <class 'numpy.ndarray'>

Called with [2.24209018 0.46915036] of type <class 'numpy.ndarray'>

Called with [2.21451417 0.47774023] of type <class 'numpy.ndarray'>

Called with [2.21451418 0.47774023] of type <class 'numpy.ndarray'>

Called with [2.21451417 0.47774025] of type <class 'numpy.ndarray'>

Called with [2.13294898 0.50319907] of type <class 'numpy.ndarray'>

Called with [2.132949 0.50319907] of type <class 'numpy.ndarray'>

Called with [2.13294898 0.50319909] of type <class 'numpy.ndarray'>

Called with [2.11475042 0.50887914] of type <class 'numpy.ndarray'>

Called with [2.11475043 0.50887914] of type <class 'numpy.ndarray'>

Called with [2.11475042 0.50887916] of type <class 'numpy.ndarray'>

message: Optimization terminated successfully.

success: True

status: 0

fun: 0.11930057876222537

x: [ 2.115e+00 5.089e-01]

nit: 4

jac: [-2.442e-06 1.345e-06]

hess_inv: [[ 1.773e+01 -5.527e+00]

[-5.527e+00 1.735e+00]]

nfev: 24

njev: 8

This shows that:

- minimize calls our function multiple times, as it searches for the values of intercept and slope giving the minimum RMSE.
- At each call, it passes a single argument that is an array containing the two values (intercept and slope).

Looking carefully, we see signs that minimize is trying small changes in the slope or intercept, presumably to estimate the gradient, as we saw in the optimization page. That detail is not our concern here.

The values in the array that minimize passes are the parameters that minimize is trying to optimize; in our case, the intercept and slope. Call this the parameter array.
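Instead of printing every call, we can record them. Here is a minimal sketch on made-up data. The list calls and the function rmse_recording are our own additions for illustration, not part of minimize. With the default method used here, the number of recorded calls should match the nfev value in the result, as it does in the output above (24 calls, nfev 24), though it is worth checking on your own SciPy version.

```python
import numpy as np
from scipy.optimize import minimize

# Made-up data for illustration.
rng = np.random.default_rng(0)
x_values = np.linspace(1, 5, 40)
y_values = 2.0 + 0.5 * x_values + rng.normal(0, 0.3, size=40)

# One entry per call: the type of the argument, and a copy of its values.
calls = []

def rmse_recording(c_s):
    # Store a copy, in case minimize reuses the same array object.
    calls.append((type(c_s), np.copy(c_s)))
    c = c_s[0]
    s = c_s[1]
    errors = y_values - (c + x_values * s)
    return np.sqrt(np.mean(errors ** 2))

res = minimize(rmse_recording, [2.25, 0.47])

print('Number of calls:', len(calls))
print('nfev reported by minimize:', res.nfev)
# Every call received a single array holding two parameters.
print(all(t is np.ndarray for t, _ in calls))
print(all(a.shape == (2,) for _, a in calls))
# The first call uses our starting guess.
print(calls[0][1])
```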

Tricks for using minimize

Unpacking

Near the top of our function, we have these two lines of code:

c = c_s[0]

s = c_s[1]

This is where we set the intercept from the first value of the parameter array, and the slope from the second.

It turns out there is a neat and versatile way of doing this in Python, called unpacking. Consider this array:

c_s = np.array([2, 1])

We can unpack these two values into variables like this:

# Unpacking!

c, s = c_s

The right-hand side contains two values (in an array). The left-hand side has two variable names, separated by a comma. Python takes the two values from the right-hand side, and puts them into the variables on the left:

print('c is', c)

print('s is', s)

c is 2

s is 1

The thing on the right-hand side can be any sequence of two things. For example, it can also be a list:

my_list = [6, 7]

my_var1, my_var2 = my_list

print('my_var1 is', my_var1)

print('my_var2 is', my_var2)

my_var1 is 6

my_var2 is 7
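As a quick sketch, here are a few other right-hand sides that unpack in the same way: a tuple, a string (one character per name), and a range.

```python
# A tuple unpacks just like a list.
a, b = (3, 4)
# A string is a sequence of characters.
first_letter, second_letter = 'ok'
# A range is a sequence of integers.
zero, one = range(2)

print(a, b)
print(first_letter, second_letter)
print(zero, one)
```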

There can be three or four or any other number of variables on the left, as long as there is a matching number of elements in the thing on the right:

list2 = [10, 100, 10000]

w, x, y = list2

print('w is', w)

print('x is', x)

print('y is', y)

w is 10

x is 100

y is 10000

The number of elements must match the number of variables:

# Error - three variables on the left, two elements on the right.

p, q, r = [1, 2]

---------------------------------------------------------------------------

ValueError Traceback (most recent call last)

Cell In[18], line 2

1 # Error - three variables on the left, two elements on the right.

----> 2 p, q, r = [1, 2]

ValueError: not enough values to unpack (expected 3, got 2)
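The mismatch can go either way. This small sketch catches the ValueError in both directions so we can compare the messages; the exact wording may vary a little between Python versions.

```python
# Too few values on the right: Python cannot fill all the names.
try:
    p, q, r = [1, 2]
except ValueError as err:
    not_enough_message = str(err)

# Too many values on the right: the extra value has nowhere to go.
try:
    p, q = [1, 2, 3]
except ValueError as err:
    too_many_message = str(err)

print(not_enough_message)
print(too_many_message)
```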

Using unpacking in minimize

Unpacking has two good uses with minimize. The first is that we can make our function to minimize a little neater:

def rmse_with_unpacking(c_s):
    # c_s has two elements, the intercept c and the slope s.
    # Use unpacking!
    c, s = c_s
    predicted_quality = c + easiness * s
    errors = quality - predicted_quality
    return np.sqrt(np.mean(errors ** 2))

The second is that we can use unpacking to (er) unpack the best-fit intercept and slope from the result of minimize. Remember, minimize returns a result value that includes an array x with the parameters minimizing our function:

m_r = minimize(rmse_with_unpacking, [2.25, 0.47])

m_r

message: Optimization terminated successfully.

success: True

status: 0

fun: 0.11930057876222537

x: [ 2.115e+00 5.089e-01]

nit: 4

jac: [-2.442e-06 1.345e-06]

hess_inv: [[ 1.773e+01 -5.527e+00]

[-5.527e+00 1.735e+00]]

nfev: 24

njev: 8

We can get the best-fit intercept and slope values by unpacking:

best_c, best_s = m_r.x

print(best_c)

print(best_s)

2.114750415469181

0.5088791428878439
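As a sanity check, here is a sketch on made-up data. Because the square root does not move the minimum, the line that minimizes the RMSE should match, to within minimize's tolerance, the ordinary least-squares line that np.polyfit finds with degree 1. np.polyfit returns the highest power first, so we unpack its result as slope, then intercept.

```python
import numpy as np
from scipy.optimize import minimize

# Made-up data for illustration.
rng = np.random.default_rng(1)
x_values = np.linspace(1, 5, 60)
y_values = 2.1 + 0.5 * x_values + rng.normal(0, 0.3, size=60)

def rmse_with_unpacking(c_s):
    # Unpack the intercept and slope from the parameter array.
    c, s = c_s
    errors = y_values - (c + x_values * s)
    return np.sqrt(np.mean(errors ** 2))

m_r = minimize(rmse_with_unpacking, [2.25, 0.47])
# Unpack the best-fit values from the result.
best_c, best_s = m_r.x

# np.polyfit returns coefficients highest power first: slope, then intercept.
lsq_s, lsq_c = np.polyfit(x_values, y_values, 1)

print('Intercepts:', best_c, lsq_c)
print('Slopes:', best_s, lsq_s)
```

Unpacking in a different order for each function is an easy place to slip, so it helps to check which order a function returns before unpacking.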

Other arguments to our function

At the moment, using minimize is a little inconvenient, because we have to make a separate cost (objective) function for each set of x and y values.

Here is our new, neater cost function to find the best slope and intercept for easiness and quality:

def cost_easy_quality(c_s):
    # c_s has two elements, the intercept c and the slope s.
    c, s = c_s
    predicted_quality = c + easiness * s
    errors = quality - predicted_quality
    return np.sqrt(np.mean(errors ** 2))

We find the intercept and slope, and get the usual values.

# Show the best intercept and slope for easiness and quality.

minimize(cost_easy_quality, [2.25, 0.47]).x

array([2.11475042, 0.50887914])

Now let’s imagine we are interested in the relationship of easiness and clarity:

clarity = np.array(ratings['Clarity'])

clarity[:10]

array([3.79136358, 3.56686702, 3.65764056, 3.9009491 , 3.77374607,

3.46548462, 3.87501873, 3.6633317 , 3.75619677, 3.43503791])

Notice that our cost_easy_quality function uses the top-level notebook variables easiness and quality within the function. It can only work on these variables, and no others. So, if we want to get the slope and intercept for easiness and clarity, instead of easiness and quality, we have to write another almost-identical function, like this:

def cost_easy_clarity(c_s):
    # c_s has two elements, the intercept c and the slope s.
    c, s = c_s
    predicted_clarity = c + easiness * s
    errors = clarity - predicted_clarity
    return np.sqrt(np.mean(errors ** 2))

# Show the best intercept and slope for easiness and clarity.

minimize(cost_easy_clarity, [2.25, 0.47]).x

array([2.11475042, 0.50887914])

How can we avoid writing such near-identical functions for each set of x and y values for which we want a best-fit line?

Generalizing the cost function with the args argument

Inspect the help for minimize by running minimize? in a notebook cell. You will notice that there is an argument we can pass to minimize called args. These are “Extra arguments passed to the objective function”. This turns out to be very useful for making our objective function more general. First, we try a silly use of args, where we pass a couple of useless bits of text to our objective function:

def rmse_with_extra_args(c_s, v1, v2):
    # An objective function with some useless extra arguments.
    print('c_s is', c_s, '; v1 is', v1, '; v2 is', v2)
    c, s = c_s
    predicted_quality = c + easiness * s
    errors = quality - predicted_quality
    return np.sqrt(np.mean(errors ** 2))

Now we tell minimize to pass some values for v1 and v2 on every call to the objective function. We do that by passing v1 and v2 inside a tuple. A tuple is a Python data type that is much like a list, in that it contains a sequence of values, but we create tuples using parentheses () rather than square brackets.

Here is our argument tuple:

v1 = 'Doctor'

v2 = 'Strange'

# Make the argument tuple. Notice the parentheses.

extras = (v1, v2)

extras

('Doctor', 'Strange')
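A short sketch of how tuples behave, for anyone meeting them for the first time. The trailing-comma detail is worth knowing if you ever pass a single extra argument: ('Doctor',) is a one-element tuple, while ('Doctor') is just a string in parentheses.

```python
v1 = 'Doctor'
v2 = 'Strange'

# Values separated by commas, in parentheses, make a tuple.
extras = (v1, v2)
print(type(extras), len(extras), extras[0])

# Like a list, a tuple can be indexed and unpacked.
first, second = extras
print(first, second)

# Unlike a list, a tuple cannot be changed once it is made.
try:
    extras[0] = 'Mister'
except TypeError as err:
    print('Cannot change a tuple:', err)

# A one-element tuple needs a trailing comma.
one_tuple = ('Doctor',)
# Without the comma, the parentheses just group, and we get a plain string.
not_a_tuple = ('Doctor')
print(type(one_tuple), type(not_a_tuple))
```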

Here we pass the argument tuple to minimize, asking minimize to pass these extra arguments to the objective function each time it calls the function:

# args has two values, one that becomes "v1" and the other that becomes "v2".

minimize(rmse_with_extra_args, [2.25, 0.47], args=extras)

c_s is [2.25 0.47] ; v1 is Doctor ; v2 is Strange

c_s is [2.25000001 0.47 ] ; v1 is Doctor ; v2 is Strange

c_s is [2.25 0.47000001] ; v1 is Doctor ; v2 is Strange

c_s is [2.15529163 0.19056832] ; v1 is Doctor ; v2 is Strange

c_s is [2.15529164 0.19056832] ; v1 is Doctor ; v2 is Strange

c_s is [2.15529163 0.19056833] ; v1 is Doctor ; v2 is Strange

c_s is [2.24765108 0.46306966] ; v1 is Doctor ; v2 is Strange

c_s is [2.2476511 0.46306966] ; v1 is Doctor ; v2 is Strange

c_s is [2.24765108 0.46306967] ; v1 is Doctor ; v2 is Strange

c_s is [2.24898418 0.46700288] ; v1 is Doctor ; v2 is Strange

c_s is [2.24898419 0.46700288] ; v1 is Doctor ; v2 is Strange

c_s is [2.24898418 0.46700289] ; v1 is Doctor ; v2 is Strange

c_s is [2.24209018 0.46915035] ; v1 is Doctor ; v2 is Strange

c_s is [2.24209019 0.46915035] ; v1 is Doctor ; v2 is Strange

c_s is [2.24209018 0.46915036] ; v1 is Doctor ; v2 is Strange

c_s is [2.21451417 0.47774023] ; v1 is Doctor ; v2 is Strange

c_s is [2.21451418 0.47774023] ; v1 is Doctor ; v2 is Strange

c_s is [2.21451417 0.47774025] ; v1 is Doctor ; v2 is Strange

c_s is [2.13294898 0.50319907] ; v1 is Doctor ; v2 is Strange

c_s is [2.132949 0.50319907] ; v1 is Doctor ; v2 is Strange

c_s is [2.13294898 0.50319909] ; v1 is Doctor ; v2 is Strange

c_s is [2.11475042 0.50887914] ; v1 is Doctor ; v2 is Strange

c_s is [2.11475043 0.50887914] ; v1 is Doctor ; v2 is Strange

c_s is [2.11475042 0.50887916] ; v1 is Doctor ; v2 is Strange

message: Optimization terminated successfully.

success: True

status: 0

fun: 0.11930057876222537

x: [ 2.115e+00 5.089e-01]

nit: 4

jac: [-2.442e-06 1.345e-06]

hess_inv: [[ 1.773e+01 -5.527e+00]

[-5.527e+00 1.735e+00]]

nfev: 24

njev: 8

To be more compact, we can assemble and pass the args argument in one go:

# Assembling and passing args in one line.

minimize(rmse_with_extra_args, [2.25, 0.47], args=('Doctor', 'Strange'))

c_s is [2.25 0.47] ; v1 is Doctor ; v2 is Strange

c_s is [2.25000001 0.47 ] ; v1 is Doctor ; v2 is Strange

c_s is [2.25 0.47000001] ; v1 is Doctor ; v2 is Strange

c_s is [2.15529163 0.19056832] ; v1 is Doctor ; v2 is Strange

c_s is [2.15529164 0.19056832] ; v1 is Doctor ; v2 is Strange

c_s is [2.15529163 0.19056833] ; v1 is Doctor ; v2 is Strange

c_s is [2.24765108 0.46306966] ; v1 is Doctor ; v2 is Strange

c_s is [2.2476511 0.46306966] ; v1 is Doctor ; v2 is Strange

c_s is [2.24765108 0.46306967] ; v1 is Doctor ; v2 is Strange

c_s is [2.24898418 0.46700288] ; v1 is Doctor ; v2 is Strange

c_s is [2.24898419 0.46700288] ; v1 is Doctor ; v2 is Strange

c_s is [2.24898418 0.46700289] ; v1 is Doctor ; v2 is Strange

c_s is [2.24209018 0.46915035] ; v1 is Doctor ; v2 is Strange

c_s is [2.24209019 0.46915035] ; v1 is Doctor ; v2 is Strange

c_s is [2.24209018 0.46915036] ; v1 is Doctor ; v2 is Strange

c_s is [2.21451417 0.47774023] ; v1 is Doctor ; v2 is Strange

c_s is [2.21451418 0.47774023] ; v1 is Doctor ; v2 is Strange

c_s is [2.21451417 0.47774025] ; v1 is Doctor ; v2 is Strange

c_s is [2.13294898 0.50319907] ; v1 is Doctor ; v2 is Strange

c_s is [2.132949 0.50319907] ; v1 is Doctor ; v2 is Strange

c_s is [2.13294898 0.50319909] ; v1 is Doctor ; v2 is Strange

c_s is [2.11475042 0.50887914] ; v1 is Doctor ; v2 is Strange

c_s is [2.11475043 0.50887914] ; v1 is Doctor ; v2 is Strange

c_s is [2.11475042 0.50887916] ; v1 is Doctor ; v2 is Strange

message: Optimization terminated successfully.

success: True

status: 0

fun: 0.11930057876222537

x: [ 2.115e+00 5.089e-01]

nit: 4

jac: [-2.442e-06 1.345e-06]

hess_inv: [[ 1.773e+01 -5.527e+00]

[-5.527e+00 1.735e+00]]

nfev: 24

njev: 8

Notice that each time minimize calls rmse_with_extra_args, it passes the first value of args as the second argument to the objective function (v1) and the second value of args as the third argument to the objective function (v2).
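One way to picture this: each time minimize needs a value, it calls the objective function with the current parameter array followed by the contents of args, roughly as fun(params, *args). The star spreads the tuple out into separate arguments. The helper call_with_args below is hypothetical, written only to show that pattern; it is not part of SciPy. The data are tiny made-up arrays.

```python
import numpy as np

# Tiny made-up data for illustration.
x_values = np.array([1.0, 2.0, 3.0, 4.0])
y_values = np.array([2.6, 3.1, 3.4, 4.1])

def rmse_with_extra_args(c_s, v1, v2):
    # Show what arrived in each argument position.
    print('c_s is', c_s, '; v1 is', v1, '; v2 is', v2)
    c, s = c_s
    errors = y_values - (c + x_values * s)
    return np.sqrt(np.mean(errors ** 2))

def call_with_args(fun, params, args):
    # Hypothetical helper: *args spreads the tuple out, so the first
    # element of args becomes the second argument of fun, and so on.
    return fun(params, *args)

params = np.array([2.25, 0.47])
value = call_with_args(rmse_with_extra_args, params, ('Doctor', 'Strange'))
# The same call, written out by hand.
same_value = rmse_with_extra_args(params, 'Doctor', 'Strange')
print(value == same_value)
```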

args solves our problem of near-identical functions for different x and y values. We can use args to pass in the x and y values for our function to work on:

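def rmse_any_line(c_s, x_values, y_values):
    # c_s has two elements, the intercept c and the slope s.
    # x_values and y_values arrive through the args argument to minimize.
    c, s = c_s
    predicted = c + x_values * s
    errors = y_values - predicted
    return np.sqrt(np.mean(errors ** 2))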

Now we can use the same objective function for any pair of x and y values:

minimize(rmse_any_line, [2.25, 0.47], args=(easiness, quality))

message: Optimization terminated successfully.

success: True

status: 0

fun: 0.11930057876222537

x: [ 2.115e+00 5.089e-01]

nit: 4

jac: [-2.442e-06 1.345e-06]

hess_inv: [[ 1.773e+01 -5.527e+00]

[-5.527e+00 1.735e+00]]

nfev: 24

njev: 8

minimize(rmse_any_line, [2.25, 0.47], args=(easiness, clarity))

message: Optimization terminated successfully.

success: True

status: 0

fun: 0.11930057876222537

x: [ 2.115e+00 5.089e-01]

nit: 4

jac: [-2.442e-06 1.345e-06]

hess_inv: [[ 1.773e+01 -5.527e+00]

[-5.527e+00 1.735e+00]]

nfev: 24

njev: 8
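To see the same objective function adapt to whatever x and y values arrive through args, here is a sketch with two invented y arrays built from different lines. Because the data are made up for illustration, each fit should land near the line used to generate it, and the two fits should differ from each other.

```python
import numpy as np
from scipy.optimize import minimize

def rmse_any_line(c_s, x_values, y_values):
    # c_s has two elements, the intercept c and the slope s.
    c, s = c_s
    predicted = c + x_values * s
    errors = y_values - predicted
    return np.sqrt(np.mean(errors ** 2))

rng = np.random.default_rng(2)
x = np.linspace(1, 5, 80)
# Two invented outcome variables with different intercepts and slopes.
y_a = 2.0 + 0.5 * x + rng.normal(0, 0.2, size=80)
y_b = 1.0 + 0.8 * x + rng.normal(0, 0.2, size=80)

# One objective function, two different data sets passed through args.
fit_a = minimize(rmse_any_line, [2.25, 0.47], args=(x, y_a))
fit_b = minimize(rmse_any_line, [2.25, 0.47], args=(x, y_b))

print('First pair: ', fit_a.x)
print('Second pair:', fit_b.x)
```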